Load Balancing Azure OpenAI with Azure Front Door

Introduction

In the previous entry, we learned how to use API Management (APIM) to load-balance the Azure OpenAI Service (AOAI) and make it redundant.

While the previous approach worked to some extent, we found that hacking APIM policies did not hold up against more complex requirements, such as changing the backend depending on the model type. After all, a dedicated load balancer is the best choice for this kind of job.

Load Balancing AOAI with Azure Front Door

Azure Front Door

Azure Front Door is one of the load balancers offered by Azure that provides L7 (HTTP/HTTPS) load balancing. This load balancer works globally and can easily load balance AOAI resources deployed in multiple regions. It provides simple path-based load balancing as well as Content Delivery Network (CDN) and Web Application Firewall (WAF) capabilities.

High Level Architecture

The high-level architecture we will build in this article is as follows.

- The user sends a request to the APIM endpoint.

- APIM authenticates with Azure AD and uses that authentication token to communicate with the backend.

- This time, instead of specifying the AOAI resources directly as the backend of APIM, we will point APIM at a Front Door that has multiple AOAI resources behind it.

Prerequisites

In the previous entry, switching backends based on the model type turned out to be a lot of work. This time, we will use Front Door’s “Origin group” feature to separate the backends that can serve each model. To do so, create the following AOAI resources and deploy the specified models to each resource. Each deployment name must be exactly the same as the model name. For example, when deploying the “gpt-35-turbo” model, name the deployment “gpt-35-turbo” as well.

- my-endpoint-canada (Canada East): gpt-35-turbo, text-embedding-ada-002

- my-endpoint-europe (West Europe): gpt-35-turbo, text-embedding-ada-002

- my-endpoint-france (France Central): gpt-35-turbo, text-embedding-ada-002

- my-endpoint-australia (Australia East): gpt-35-turbo, gpt-35-turbo-16k

- my-endpoint-japan (Japan East): gpt-35-turbo, gpt-35-turbo-16k

- my-endpoint-us2 (East US 2): gpt-35-turbo, gpt-35-turbo-16k
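The layout above can be written down as data, which makes it easy to see which resources will end up behind each model later on. The following Python sketch is only an illustration: the dictionary and function names are made up for this article, and nothing here calls an Azure API.

```python
# Deployments per AOAI resource, copied from the list above.
DEPLOYMENTS = {
    "my-endpoint-canada": ["gpt-35-turbo", "text-embedding-ada-002"],
    "my-endpoint-europe": ["gpt-35-turbo", "text-embedding-ada-002"],
    "my-endpoint-france": ["gpt-35-turbo", "text-embedding-ada-002"],
    "my-endpoint-australia": ["gpt-35-turbo", "gpt-35-turbo-16k"],
    "my-endpoint-japan": ["gpt-35-turbo", "gpt-35-turbo-16k"],
    "my-endpoint-us2": ["gpt-35-turbo", "gpt-35-turbo-16k"],
}


def resources_by_model(deployments):
    """Invert the mapping: model name -> resources that host it."""
    groups = {}
    for resource, models in deployments.items():
        for model in models:
            groups.setdefault(model, []).append(resource)
    return groups


if __name__ == "__main__":
    for model, resources in resources_by_model(DEPLOYMENTS).items():
        print(f"{model}: {', '.join(resources)}")
```

Every resource hosts “gpt-35-turbo”, while the other two models are each available on only three resources. That split is what the origin groups will capture.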

Front Door Setup

Now let’s set up the Front Door. Open the Azure portal, search for “Front Door” and select “Front Door and CDN profiles” from the results. Click “Create” and, on the screen that comes up, select “Azure Front Door” and “Custom create” to proceed to the next screen.

First, name the Front Door. You cannot choose a region since Front Door is a global service.

Next, switch to the “Endpoint” tab and click “Add an endpoint”.

Name the Endpoint. This is used for determining the hostname of the load balancer.

Next, press “Add a route” to set the default route.

By default, all paths are mapped to this route, so “Patterns to match” can be /* here.

Next, create an “origin group” to be associated with this route. Requests matching the default route just created above will be forwarded to the origin group created here.

Name the origin group and add origins here.

Here, the origin is nothing but an AOAI resource. For “Origin type,” select “Custom” and enter the hostname of the AOAI resource. Use the same value for “Origin host header”.

Continue to click on “Add an origin” and enter all the hosts listed in the “Prerequisites” section at the beginning.

You may be confused because the previous entry is still shown when you press “Add an origin”. Don’t worry: you are not modifying the previous origin, you are entering a new record.
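For reference, the values entered for each origin can be sketched as follows. This assumes the AOAI resources use the default `<resource-name>.openai.azure.com` hostname; a resource with a different hostname would need its own value. The field names only mirror the portal labels and are not an Azure SDK schema.

```python
RESOURCE_NAMES = [
    "my-endpoint-canada",
    "my-endpoint-europe",
    "my-endpoint-france",
    "my-endpoint-australia",
    "my-endpoint-japan",
    "my-endpoint-us2",
]


def origin_settings(resource_name):
    """Return the portal values for one origin (illustrative field names)."""
    host = f"{resource_name}.openai.azure.com"  # assumed default AOAI hostname
    return {
        "origin_type": "Custom",
        "host_name": host,
        "origin_host_header": host,  # same value as the host name
    }


ORIGINS = [origin_settings(name) for name in RESOURCE_NAMES]

if __name__ == "__main__":
    for origin in ORIGINS:
        print(origin["host_name"])
```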

Also, uncheck “Enable health probes” on this screen. Health probes let the origin group periodically check whether each backend origin is working properly. However, AOAI resources have no endpoint suitable for health probes and return HTTP 404 for invalid requests, while Front Door considers only HTTP 200 to be healthy. This is not a problem, because later in this entry we will use APIM’s <retry> policy instead of Front Door’s health probes to ensure redundancy.
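To see why the probe would work against us, here is a toy model of the rule described above. It assumes a probe is a plain request that counts as healthy only on HTTP 200 and that each AOAI resource answers it with 404. Real probes have more settings (path, method, interval), which are ignored here.

```python
def probe_is_healthy(status_code):
    """Simplified rule from the text: only HTTP 200 counts as healthy."""
    return status_code == 200


def healthy_origins(probe_results):
    """Return the origins whose last probe response was treated as healthy."""
    return [name for name, status in probe_results.items() if probe_is_healthy(status)]


# Assumed probe responses: every AOAI resource returns 404 to the probe request.
PROBE_RESULTS = {
    "my-endpoint-australia": 404,
    "my-endpoint-japan": 404,
    "my-endpoint-us2": 404,
}

if __name__ == "__main__":
    # Under these assumptions, no origin passes the probe.
    print(healthy_origins(PROBE_RESULTS))
```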

The necessary settings are now in place and all origins have been added.

Complete the creation of the origin group and return to the previous screen to complete the addition of the default route.

Although there is currently only one route, we will complete the creation of the Front Door profile for now.

Press “Create” after passing the validation.

Next, add an origin group for each model that only some AOAI resources have, such as “text-embedding-ada-002” and “gpt-35-turbo-16k”. When a request is made to a deployment of one of these models, the request is forwarded to the origin group defined here.

Here we create an origin group for the “text-embedding-ada-002” model.

Based on the “prerequisites” at the beginning, select only AOAI resources with the “text-embedding-ada-002” model deployed.

We do exactly the same for the “gpt-35-turbo-16k” model. Here we choose different AOAI resources than before.

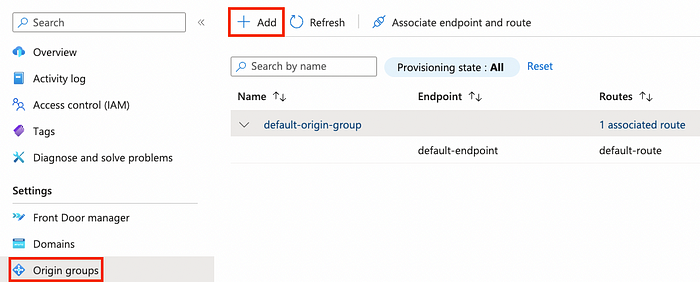

Now that we have finished creating origin groups for particular models, we will also add the corresponding routes. Select “Front Door manager” in “Settings”, hit “Add a route”.

Add a route for “text-embedding-ada-002”.

For “Domains”, use the same one used for the default route. For “Patterns to match”, refer to the AOAI API reference and use the corresponding path. Finally, for “Origin group,” specify the group for “text-embedding-ada-002” that we just created.

Again, do exactly the same for “gpt-35-turbo-16k”.
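The effect of the three routes can be sketched in Python. The path patterns below are assumptions based on the `/openai/deployments/{deployment-id}/...` shape of the AOAI REST API, and the origin group names are hypothetical. The matching rule (longest matching prefix wins) is a simplification of how Front Door prefers the most specific route.

```python
# Hypothetical route table: "Patterns to match" -> origin group name.
ROUTES = {
    "/*": "default-origin-group",
    "/openai/deployments/text-embedding-ada-002/*": "text-embedding-ada-002-group",
    "/openai/deployments/gpt-35-turbo-16k/*": "gpt-35-turbo-16k-group",
}


def select_origin_group(path, routes=ROUTES):
    """Pick the origin group whose pattern has the longest matching prefix."""
    best_prefix, best_group = None, None
    for pattern, group in routes.items():
        if pattern.endswith("*"):
            prefix = pattern[:-1]  # wildcard pattern: compare as a prefix
            matches = path.startswith(prefix)
        else:
            prefix = pattern  # no wildcard: require an exact match
            matches = path == prefix
        if matches and (best_prefix is None or len(prefix) > len(best_prefix)):
            best_prefix, best_group = prefix, group
    return best_group


if __name__ == "__main__":
    for deployment, operation in [
        ("gpt-35-turbo", "chat/completions"),
        ("text-embedding-ada-002", "embeddings"),
        ("gpt-35-turbo-16k", "chat/completions"),
    ]:
        path = f"/openai/deployments/{deployment}/{operation}"
        print(path, "->", select_origin_group(path))
```

Note the trailing slash before the wildcard in the model-specific patterns. Without it, a prefix such as `/openai/deployments/gpt-35-turbo` would also match requests for “gpt-35-turbo-16k”.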

All settings are complete. Note the hostname of the endpoint.

Change the backend of APIM to Front Door

Now that the Front Door has been configured, change the backend that APIM points to so that it uses the Front Door endpoint.

Change the policy as follows:

<policies>
    <inbound>
        <base />
        <set-backend-service base-url="https://default-endpoint-erayfxe3d4bfa5fs.z01.azurefd.net/" />
        <authentication-managed-identity resource="https://cognitiveservices.azure.com" output-token-variable-name="msi-access-token" ignore-error="false" />
        <set-header name="Authorization" exists-action="override">
            <value>@("Bearer " + (string)context.Variables["msi-access-token"])</value>
        </set-header>
    </inbound>
    <backend>
        <retry condition="@(context.Response.StatusCode >= 300)" count="5" interval="1" max-interval="10" delta="1">
            <forward-request buffer-request-body="true" buffer-response="false" />
        </retry>
    </backend>
    <outbound>
        <base />
    </outbound>
    <on-error>
        <base />
    </on-error>
</policies>

It is extremely simple now!

<set-backend-service> points to the endpoint of the Front Door. The <retry> condition is also much simpler: if the backend returns an error, we simply send the request to the Front Door again.

Now make a request to the APIM endpoint and verify that the response is returned correctly for all models.

# gpt-35-turbo
curl "https://my-cool-apim-us1.azure-api.net/openai-test/openai/deployments/gpt-35-turbo/chat/completions?api-version=2023-05-15" \
  -H "Content-Type: application/json" \
  -d '{"messages": [{"role": "user", "content": "Tell me about Azure OpenAI Service."}]}'

# text-embedding-ada-002
curl "https://my-cool-apim-us1.azure-api.net/openai-test/openai/deployments/text-embedding-ada-002/embeddings?api-version=2023-05-15" \
  -H "Content-Type: application/json" \
  -d '{"input": "Sample Document goes here"}'

# gpt-35-turbo-16k
curl "https://my-cool-apim-us1.azure-api.net/openai-test/openai/deployments/gpt-35-turbo-16k/chat/completions?api-version=2023-05-15" \
  -H "Content-Type: application/json" \
  -d '{"messages": [{"role": "user", "content": "Tell me about Azure OpenAI Service."}]}'

Did you get all the expected responses? Good!
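If you would rather script these checks, the same three requests can be prepared with Python’s standard library. This is a minimal sketch: it only builds the requests and prints their URLs, and the helper name `build_request` is made up for illustration. Sending them requires network access to your own APIM instance.

```python
import json
import urllib.request

APIM_BASE = "https://my-cool-apim-us1.azure-api.net/openai-test"
API_VERSION = "2023-05-15"


def build_request(deployment, operation, payload):
    """Build (but do not send) a POST request for one deployment."""
    url = (
        f"{APIM_BASE}/openai/deployments/{deployment}/{operation}"
        f"?api-version={API_VERSION}"
    )
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


CHAT = {"messages": [{"role": "user", "content": "Tell me about Azure OpenAI Service."}]}
EMBEDDING = {"input": "Sample Document goes here"}

REQUESTS = [
    build_request("gpt-35-turbo", "chat/completions", CHAT),
    build_request("text-embedding-ada-002", "embeddings", EMBEDDING),
    build_request("gpt-35-turbo-16k", "chat/completions", CHAT),
]

if __name__ == "__main__":
    for req in REQUESTS:
        print(req.get_method(), req.full_url)
        # To actually send a request: urllib.request.urlopen(req).read()
```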

After verifying with the curl command, use APIM’s “Test” to verify that all models are returning responses from the origin group as expected (see previous entry for details). For example, requests for “gpt-35-turbo” should receive responses from all AOAI resources, “text-embedding-ada-002” from Canada, Europe and France, and “gpt-35-turbo-16k” from Australia, Japan and US2.

Make sure retries are working

Finally, let’s confirm that the retry-on-error behavior used in the previous entry works well in combination with Front Door. Using this entry as a reference, select the “my-endpoint-australia” AOAI resource from the “gpt-35-turbo-16k” origin group and remove the “Cognitive Services OpenAI User” role that was assigned to APIM’s managed identity on that resource. This simulates a failure of that resource.

Several requests to “gpt-35-turbo-16k” always returned HTTP 200 on the surface, but a closer look at Trace showed that HTTP 401 errors occurred when “my-endpoint-australia” was selected as the backend.

Upon receiving an error, APIM’s <retry> policy automatically retries up to 5 times.

When another resource was selected from the origin group, the request succeeded and HTTP 200 was returned as the response.
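The observed behavior can be reproduced with a small simulation. It rests on two loud assumptions: the fake backend simply rotates through the origins, which is not how Front Door actually chooses an origin, and the wait time between retries is left out. The names are hypothetical, and `retries=5` mirrors `count="5"` in the policy.

```python
import itertools

ORIGIN_GROUP = ["my-endpoint-australia", "my-endpoint-japan", "my-endpoint-us2"]
BROKEN = {"my-endpoint-australia"}  # role removed, so this origin answers 401


def make_backend(origins, broken):
    """Return a fake backend that rotates through the origins on every call."""
    rotation = itertools.cycle(origins)

    def call():
        origin = next(rotation)
        return origin, (401 if origin in broken else 200)

    return call


def forward_with_retry(call, retries=5):
    """Call the backend once, then retry while the status code is >= 300."""
    attempts = []
    for _ in range(1 + retries):
        origin, status = call()
        attempts.append((origin, status))
        if status < 300:
            break
    return attempts


if __name__ == "__main__":
    for origin, status in forward_with_retry(make_backend(ORIGIN_GROUP, BROKEN)):
        print(origin, status)
```

In this run the first attempt lands on the broken origin and returns 401. The retry reaches a working origin, so the caller only ever sees the final HTTP 200.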

The current <retry> policy retries unconditionally whenever the response status code is 300 or higher. This allows automatic retries on backend errors and even on rate limiting (HTTP 429).
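As a quick illustration of what that condition covers, the snippet below applies the same comparison to a few common status codes. The list of codes is just a sample chosen for this sketch.

```python
def is_retried(status_code):
    """Mirror of the policy condition: context.Response.StatusCode >= 300."""
    return status_code >= 300


SAMPLE_STATUSES = {
    200: "OK",
    400: "Bad Request",
    401: "Unauthorized",
    429: "Too Many Requests",
    500: "Internal Server Error",
}

if __name__ == "__main__":
    for code, reason in SAMPLE_STATUSES.items():
        action = "retry" if is_retried(code) else "return to caller"
        print(f"{code} {reason}: {action}")
```

Client errors such as 400 are retried as well, even though a malformed request will fail on every origin. That is the price of keeping the condition this simple.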

Conclusion

In this entry, we looked at an intuitive way to load-balance backends using a dedicated load balancer, Azure Front Door. We also confirmed that this method can easily distribute requests to different origin groups depending on the URL path. Finally, we confirmed that the Front Door approach can be combined with APIM’s <retry> policy to ensure redundancy.

I hope this article inspires ideas for you to configure your AOAI resources using the various services in Azure. Enjoy!